What is Kafka Exactly Once Semantics?

The machines in a distributed publish-subscribe messaging system can always fail independently of one another. While a producer is sending a message to a Kafka topic, an individual broker may crash or the network may fail. Depending on how the producer reacts to such a failure, you get different delivery semantics. With exactly-once semantics, even if a producer sends a message multiple times, the message is delivered to the end consumer only once. Exactly-once is the most sought-after guarantee, but it is also the least understood, because it requires cooperation between the messaging system and the applications that produce and consume the messages.

Importance of Kafka Exactly Once Semantics

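At-least-once semantics guarantees that every written message is persisted at least once, without any data loss. This is useful when the system is tuned for reliability, but it comes at a price: when the producer retries after a failure, the retry can produce a duplicate in the stream. Exactly-once semantics keeps the no-loss guarantee while eliminating those duplicates.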

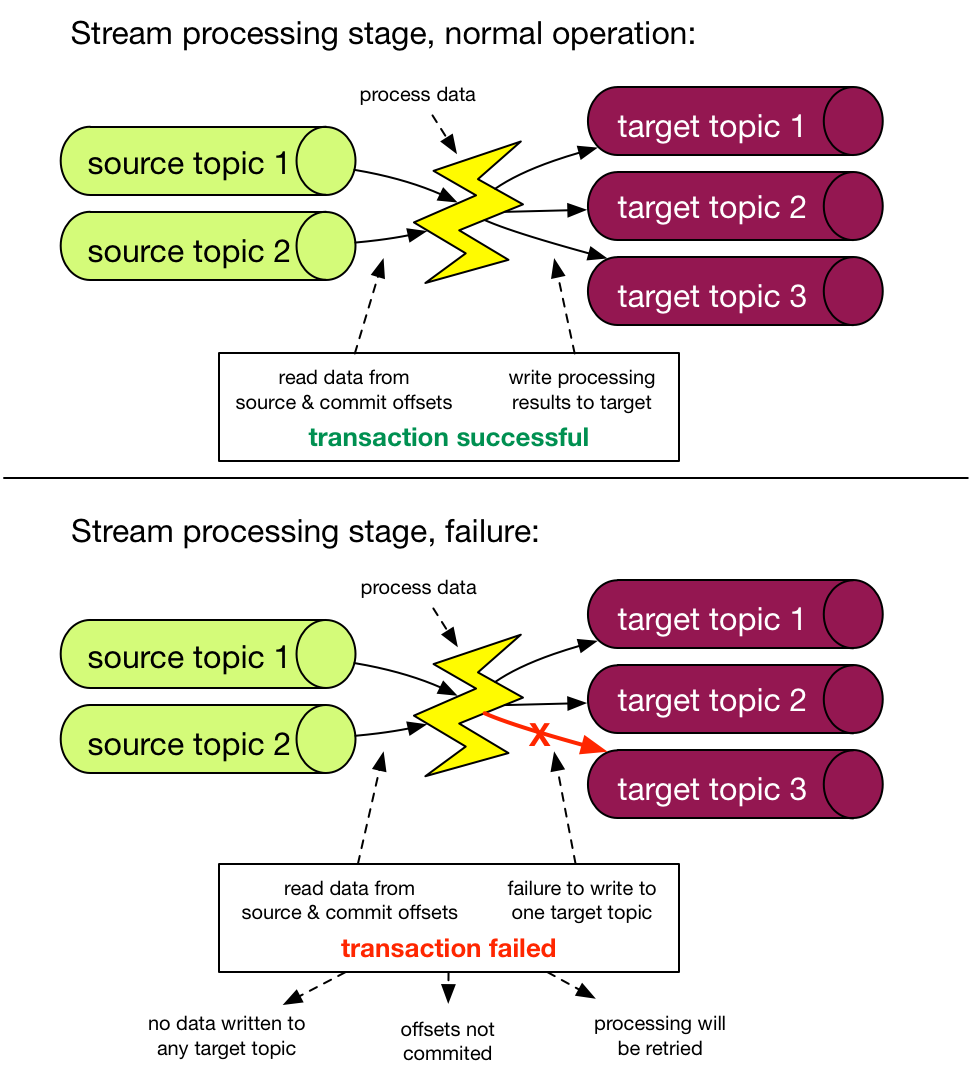
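To see why retries alone lead to duplicates, here is a minimal sketch in plain Java with no Kafka client involved. The class `NaiveLog` and the method `sendWithRetry` are hypothetical names made up for illustration: the broker stores the message, the acknowledgement is lost on the first attempt, and the producer resends.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model of at-least-once delivery; this is not Kafka's real API.
class NaiveLog {
    final List<String> entries = new ArrayList<>();
    private boolean dropNextAck;

    NaiveLog(boolean dropFirstAck) {
        this.dropNextAck = dropFirstAck;
    }

    // Appends the message and returns true if the ack reaches the producer.
    boolean append(String message) {
        entries.add(message);      // the write itself succeeds
        if (dropNextAck) {         // ...but the ack is lost in transit
            dropNextAck = false;
            return false;
        }
        return true;
    }

    // The producer keeps resending until it sees an ack.
    static void sendWithRetry(NaiveLog log, String message) {
        while (!log.append(message)) {
            // no ack received: resend the same message
        }
    }

    public static void main(String[] args) {
        NaiveLog log = new NaiveLog(true);
        sendWithRetry(log, "order-42");
        System.out.println(log.entries); // the message is stored twice
    }
}
```

Nothing was lost, but the log now holds the same message twice, which is exactly the situation the idempotent producer is meant to prevent.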

Although exactly-once delivery already makes for a sophisticated messaging system, on its own it only covers writes to a single TopicPartition. To go further, you need transactional guarantees: the ability to write atomically to multiple TopicPartitions. Atomicity here means that a group of messages spanning several TopicPartitions is committed as a single unit, so that either all of the messages are committed or none of them are.

What is an Idempotent Producer?

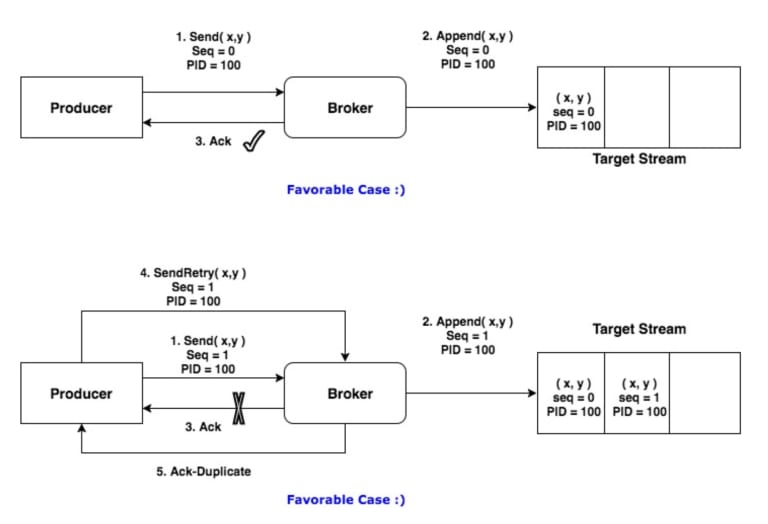

Idempotency is at the core of Kafka's Exactly Once Semantics. To prevent a message from being processed multiple times, it must be stored in a Kafka topic only once. To make this possible, the producer is given a unique ID at initialization, known as the producer ID or PID.

Each message is bundled with the PID and a sequence number and sent to the broker. The sequence number starts at zero and increases monotonically, so a broker will only accept a message if its sequence number is exactly one more than that of the last committed message from the same PID/TopicPartition pair. If this is not the case, the broker rejects the message.

A sequence number lower than expected means the message has already been stored: the broker reports a duplicate error, which the producer can safely ignore. A sequence number higher than expected means some messages may have been lost, and results in an out-of-sequence error.
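The sequence-number check can be sketched in a few lines of plain Java. This is a simplified model, not broker code: the class `SequenceCheckingLog` and its `Result` enum are illustrative names, and the real broker tracks more state than a single integer per PID/TopicPartition pair.

```java
import java.util.HashMap;
import java.util.Map;

// Simplified model of the broker-side check; all names are illustrative.
class SequenceCheckingLog {
    enum Result { ACCEPTED, DUPLICATE, OUT_OF_SEQUENCE }

    // Last accepted sequence number per "pid:partition" key.
    private final Map<String, Integer> lastSeq = new HashMap<>();

    Result append(long pid, int partition, int seq) {
        String key = pid + ":" + partition;
        int expected = lastSeq.getOrDefault(key, -1) + 1; // sequences start at 0
        if (seq < expected) {
            return Result.DUPLICATE;        // already stored; safe to ignore
        }
        if (seq > expected) {
            return Result.OUT_OF_SEQUENCE;  // a gap: earlier messages are missing
        }
        lastSeq.put(key, seq);
        return Result.ACCEPTED;
    }

    public static void main(String[] args) {
        SequenceCheckingLog log = new SequenceCheckingLog();
        System.out.println(log.append(7L, 0, 0)); // ACCEPTED
        System.out.println(log.append(7L, 0, 0)); // DUPLICATE (a retry)
        System.out.println(log.append(7L, 0, 2)); // OUT_OF_SEQUENCE (1 is missing)
        System.out.println(log.append(7L, 0, 1)); // ACCEPTED
    }
}
```

Because the state is keyed by PID and partition, a different producer (or the same producer writing to another partition) starts again from sequence number zero.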

A new PID is assigned to the producer when it restarts. As a result, idempotency is only guaranteed within a single producer session. Within that session, the producer may retry requests after a failure, but each message is stored in the log only once. Duplicates can still arise upstream: if the producer's data source itself delivers the same record twice, Kafka treats the two sends as distinct messages and will not deduplicate them. In such cases you may need an additional deduplication step of your own.
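Enabling the idempotent producer is a matter of configuration. The sketch below only builds the `Properties` object, so it runs without the Kafka client on the classpath. The helper class `IdempotentProducerConfig` and the broker address passed in are assumptions for illustration; the property names are standard producer settings.

```java
import java.util.Properties;

// Hypothetical helper; only the property keys and values are Kafka's.
class IdempotentProducerConfig {
    static Properties build(String bootstrapServers) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        // Let the broker deduplicate retries using PID + sequence number.
        props.put("enable.idempotence", "true");
        // Idempotence requires acknowledgement from all in-sync replicas.
        props.put("acks", "all");
        props.put("key.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        return props;
    }

    public static void main(String[] args) {
        // "localhost:9092" is a placeholder address.
        System.out.println(build("localhost:9092"));
    }
}
```

The resulting properties would be passed to the `KafkaProducer` constructor. Recent client versions may already enable idempotence by default, so it is worth checking the documentation for the version you run.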

Pipeline Stages

In a Kafka Streams application, in addition to mapping and filtering, you can aggregate, build queryable projections, window the data based on event time or processing time, and so on. Along the way, the data flows through several Kafka topics and processing steps.

When the exactly-once processing guarantee is enabled on a Kafka Streams application, transactions are used transparently behind the scenes. The way you define a data processing pipeline with the API does not change.
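As a rough sketch of what enabling that setting looks like, the snippet below builds a Streams configuration as plain `Properties`. The helper class `ExactlyOnceStreamsConfig` and the application id and broker address passed in are assumptions for illustration. The value `exactly_once_v2` is the one used by recent Kafka Streams releases; older releases used `exactly_once`, so check which one your version expects.

```java
import java.util.Properties;

// Hypothetical helper; only the property keys and values are Kafka's.
class ExactlyOnceStreamsConfig {
    static Properties build(String applicationId, String bootstrapServers) {
        Properties props = new Properties();
        props.put("application.id", applicationId);
        props.put("bootstrap.servers", bootstrapServers);
        // Switch the whole topology from at-least-once (the default)
        // to exactly-once processing.
        props.put("processing.guarantee", "exactly_once_v2");
        return props;
    }

    public static void main(String[] args) {
        // Both arguments are placeholder values.
        System.out.println(build("orders-pipeline", "localhost:9092"));
    }
}
```

The topology code itself (the maps, filters, and aggregations) stays the same; only this one setting differs.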

Transactions are hard in general, and they are especially hard in a distributed system like Kafka. The important point is that Kafka transactions operate in a closed system: a transaction is limited to Kafka topics and partitions.

Kafka Exactly Once Semantics: Ensuring Atomic Transactions

The idempotent producer ensures that each message is written exactly once to a single partition. Transactions extend this guarantee across partitions, allowing applications to write to multiple TopicPartitions atomically: as a group, all writes to these TopicPartitions either succeed or fail.

To use transactions, the producer must be given a unique ID, the TransactionalId, that stays the same across all sessions of the application. There is a one-to-one mapping between TransactionalId and PID. When a new instance with the same TransactionalId comes online, Kafka guarantees that there is exactly one active producer with that TransactionalId, fencing off any older instance. Kafka also ensures that the new instance starts from a clean state by completing (committing or aborting) all outstanding transactions.
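One way to picture the "only one active producer" guarantee is a counter per TransactionalId that is bumped each time an instance registers. The sketch below is a toy model under that assumption, not Kafka's actual coordinator; `FencingCoordinator`, `register`, and `mayWrite` are hypothetical names.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model of producer fencing; not Kafka's real transaction coordinator.
class FencingCoordinator {
    // Current epoch per TransactionalId; each new instance bumps it.
    private final Map<String, Integer> epochs = new HashMap<>();

    // Called when a producer instance starts; returns the epoch it must use.
    int register(String transactionalId) {
        return epochs.merge(transactionalId, 1, Integer::sum);
    }

    // A write is allowed only from the most recently registered instance.
    boolean mayWrite(String transactionalId, int epoch) {
        return epochs.getOrDefault(transactionalId, 0) == epoch;
    }

    public static void main(String[] args) {
        FencingCoordinator c = new FencingCoordinator();
        int oldEpoch = c.register("payments-app");
        int newEpoch = c.register("payments-app"); // a restarted instance
        System.out.println(c.mayWrite("payments-app", oldEpoch)); // false: fenced
        System.out.println(c.mayWrite("payments-app", newEpoch)); // true
    }
}
```

In the real client, a fenced instance learns about its status through a `ProducerFencedException`, at which point the only sensible action is to close the producer.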

Here’s a code snippet that shows the use of the new Producer Transactional APIs to send messages atomically to a set of topics:

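    // Register the TransactionalId with the broker and fence older instances.
    producer.initTransactions();
    try {
        producer.beginTransaction();
        producer.send(record0);
        producer.send(record1);
        // Commit the consumed offsets as part of the same transaction.
        producer.sendOffsetsToTransaction(…);
        producer.commitTransaction();
    } catch (ProducerFencedException e) {
        // Another instance with the same TransactionalId is active; stop this one.
        producer.close();
    } catch (KafkaException e) {
        // Any other error: abort so the writes are discarded as a group.
        producer.abortTransaction();
    }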
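The snippet above assumes a producer that was created with a TransactionalId, and its atomicity is only visible to consumers that read committed data. The following sketch shows the configuration on both sides as plain `Properties`. The helper class `TransactionalClientConfig` and the ids and address passed in are assumptions for illustration; the property names are standard client settings.

```java
import java.util.Properties;

// Hypothetical helper; only the property keys and values are Kafka's.
class TransactionalClientConfig {
    static Properties producer(String bootstrapServers, String transactionalId) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        // Must stay the same across restarts of the application instance.
        props.put("transactional.id", transactionalId);
        // Transactions build on the idempotent producer.
        props.put("enable.idempotence", "true");
        return props;
    }

    static Properties consumer(String bootstrapServers, String groupId) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("group.id", groupId);
        // Only return messages from committed transactions.
        props.put("isolation.level", "read_committed");
        // Offsets are committed through the producer's transaction instead.
        props.put("enable.auto.commit", "false");
        return props;
    }

    public static void main(String[] args) {
        // All argument values are placeholders.
        System.out.println(producer("localhost:9092", "payments-app-0"));
        System.out.println(consumer("localhost:9092", "payments-readers"));
    }
}
```

A consumer left at the default isolation level would also see messages from transactions that are still open or were later aborted.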

Conclusion

Kafka Exactly Once Semantics is a significant improvement to the producer, which was formerly the weakest link in Kafka's API. However, Kafka can only give you exactly-once semantics if the consumer's state, result, or output is itself stored in Kafka.

If you have a consumer that, for example, makes non-idempotent database updates, there is a risk of duplication if the consumer crashes after the database has been updated but before the Kafka offsets have been committed. Conversely, if the offsets are committed first, you get "message loss" when the program stops after the offsets have been committed but before the database transaction completes.
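A common way around this problem, offered here as one possible approach and not something Kafka does for you, is to store the last processed offset in the same database transaction as the result, and to skip any message whose offset has already been recorded. The sketch below models this with an in-memory class; `OffsetTrackingStore` and its methods are hypothetical names.

```java
import java.util.HashMap;
import java.util.Map;

// Toy "database" that stores the result and the last processed offset together.
class OffsetTrackingStore {
    private long balance = 0;
    private final Map<Integer, Long> lastOffset = new HashMap<>(); // per partition

    // Applies a non-idempotent update at most once per (partition, offset).
    // In a real database both writes would share one transaction.
    synchronized boolean apply(int partition, long offset, long amount) {
        long last = lastOffset.getOrDefault(partition, -1L);
        if (offset <= last) {
            return false; // redelivered after a crash: skip it
        }
        balance += amount;
        lastOffset.put(partition, offset);
        return true;
    }

    synchronized long balance() {
        return balance;
    }

    public static void main(String[] args) {
        OffsetTrackingStore db = new OffsetTrackingStore();
        db.apply(0, 5L, 100); // processed, then the consumer crashes before committing offsets
        db.apply(0, 5L, 100); // Kafka redelivers offset 5 after the restart
        System.out.println(db.balance()); // 100, not 200
    }
}
```

On restart, such a consumer would typically resume from the offset stored in the database instead of relying on the offsets committed to Kafka.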

Hevo is a no-code data pipeline that offers a robust and comprehensive ETL solution. At a reasonable cost, you can connect it to your databases, cloud apps, flat files, clickstream, and other systems.
